Fatal 'Driverless' Tesla Crash Raises Questions About Company's Autopilot System

Tesla CEO Elon Musk tweets that Autopilot was not "enabled" at time of crash as federal and state investigators look into cause

As federal and state crash investigators look into how a Tesla Model S that appeared to have no one in the driver seat crashed into a tree and killed the vehicle's two occupants, Tesla CEO Elon Musk publicly stated that the vehicle's Autopilot driver assistance feature was not active at the time of the crash.

Although independent investigators have yet to determine whether Autopilot was a factor at all, safety advocates, including those at Consumer Reports, say that Tesla could do a better job, as other automakers already have, of using technology to prevent a scenario in which a driver relies on the car to automate steering, acceleration, and braking while not paying attention.

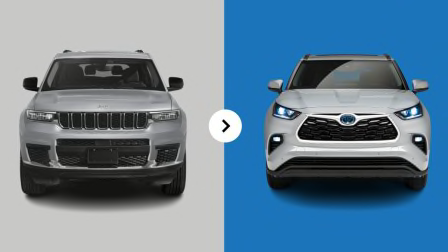

Jake Fisher, senior director of Consumer Reports’ Auto Test Center, says that no vehicle on the market is truly self-driving. He points out that in recent CR testing of driver assistance systems, Tesla's Autopilot scored near the top overall but lagged other systems in driver monitoring performance.

“While much is still unknown about this crash, I can say for sure that it was not caused by a self-driving car,” he says. “That's because no Tesla or any other vehicle you can buy can drive itself. In our tests, Tesla’s Autopilot, even with the Full Self-Driving option, doesn’t make the car self-driving, and without adequate driving monitoring, Tesla lags behind General Motors’ Super Cruise system.”

When activated, Autopilot can automatically brake, accelerate, and keep the vehicle within a travel lane in certain situations. Another suite of features, which Tesla calls "Full Self-Driving Capability," does not turn a Tesla into a self-driving car but adds features, including the ability to make lane changes without driver input and to move a vehicle out of a parking space without a driver in the vehicle.

Tesla vehicles do not currently offer any real-time driver monitoring systems to ensure a driver is in the driver seat, awake, and looking at the road when Autopilot or Full Self-Driving Capability features are activated. A Model S, like the one in the crash, looks for the force of a driver's hands on the steering wheel. If that force is not detected, the driver will encounter a series of escalating alarms until the vehicle disables Autopilot or Full Self-Driving entirely. In contrast, GM’s Super Cruise relies on an in-car camera to determine whether the driver is looking at the road. If the driver ignores repeated warnings to pay attention, the vehicle will eventually bring itself to a gradual stop, activate the flashers, and notify emergency services. BMW, Ford, and others offer similar driver monitoring systems.

In the past, Tesla owners have been known to “trick” the system meant to confirm active driver engagement by attaching a device to the steering wheel, says Kelly Funkhouser, program manager for vehicle interface testing at CR. “It’s not impossible to engage the system from the back seat,” she says.

Funkhouser and Fisher tested Tesla's Autopilot and the suite of Full Self-Driving features as part of an evaluation of more than 15 driver assistance systems, and Tesla's system fell short of GM's Super Cruise system, particularly when it came to ensuring the driver stayed engaged.

Neither Autopilot nor Tesla’s Full Self-Driving Package makes a car self-driving, says Fisher. “Drivers must still watch the road and be ready to act. Any system that can automate steering and speed control needs to also make sure the driver is still looking at the road.” Tesla's website reiterates the driver's responsibility, saying that "Autopilot and Full Self-Driving Capability are intended for use with a fully attentive driver, who has their hands on the wheel and is prepared to take over at any moment." Tesla did not respond to CR's request for comment.

The National Highway Traffic Safety Administration has sent a Special Crash Investigation team to the site. “We are actively engaged with local law enforcement and Tesla to learn more about the details of the crash and will take appropriate steps when we have more information,” a spokesperson for the agency told CR in an email. Mark Herman, the Harris County Precinct 4 constable, told CR that the National Transportation Safety Board (NTSB) also will investigate, and that investigators have requested access to the Tesla’s event data recorder, also known as a “black box,” which can offer more information about how fast the vehicle was traveling, which systems were engaged, and whether a driver was in the front seat.

“We hope to get all that through search warrants and subpoenas,” he told CR. “It would include black box data and any relevant cloud data that Tesla has.”

Tesla has indicated that it uses the driver-facing in-cabin camera in its Model 3 and Y vehicles to monitor drivers and ban them from using certain vehicle systems if the camera detects abuse, but the Model S lacks an in-car camera. Funkhouser points out that banning a driver after the fact does not stop them from abusing the system in the first place, when it could save lives.

“If there is any possibility of a driver misusing a system, auto manufacturers need to put in safeguards,” she says. “At a minimum, the systems should be able to detect if there is a human in the driver’s seat, that they are awake, and that they are looking forward.”

Joe Young, spokesman for the Insurance Institute for Highway Safety (IIHS), told CR that all vehicles that incorporate automation should include driver monitoring. But he also said that the very name “Autopilot” may mislead drivers; IIHS research showed that the names automakers give advanced driving systems play a big part in what drivers believe those systems are capable of. “They need to be named properly, otherwise people may think they're something they're not,” he told CR.

Sam Abuelsamid, principal analyst at Guidehouse Insights, a market research firm, agrees. He points to videos of Tesla CEO Elon Musk doing TV interviews in moving Tesla vehicles without his hands on the wheel, videos that Musk retweeted of Tesla fans not paying attention while using Autopilot, and frequent promises that Tesla vehicles would be capable of full autonomy and used by owners as “robotaxis.”

“While the Tesla fine print says the driver is responsible and must keep hands on the wheel and eyes on the road, the actions of the CEO have repeatedly contradicted this,” he told CR. “Combined with mainstream media repeating his claims without any question and calling Teslas ‘self-driving’ or ‘autonomous’ in headlines, many ordinary people have the impression that the vehicles are more capable than they are.”

Vehicle automation experts took to Twitter in the immediate aftermath of the crash to voice their concerns about Autopilot.

Missy Cummings, a former fighter pilot who now directs the humans and autonomy lab at Duke University in Durham, N.C., called the crash “inevitable,” and suggested that simple technology could prevent the problem. “If we can sense whether there is weight in the front right seat and turn off the airbag, we can sense when no weight is in the driver seat & stop the car,” she wrote.

Carnegie Mellon professor Costa Samaras, who researches autonomy and climate change, suggested that Tesla change the name of Autopilot to avoid misleading drivers. “This tragedy was potentially avoidable. These aren't driverless vehicles. Driverless vehicles do not yet exist,” he wrote.

Last year, the NTSB warned that drivers are placing too much trust in Autopilot, and that federal regulators aren’t doing enough to make sure automakers are deploying their systems safely. In its report, the NTSB argued that Tesla has not taken adequate action to prevent drivers from abusing Autopilot, and that NHTSA—which writes and enforces vehicle safety regulations—must set standards that could prevent fatalities from happening instead of just investigating them afterward.

“The NTSB, CR, and other safety experts have been really clear about the hazards and how to address them. It’s been several years. Tesla still hasn’t put needed safeguards in place, and NHTSA hasn’t forced the company to do so,” says William Wallace, manager of safety policy at CR. “Every day without action is another day we might see a preventable crash and loss of life. It's incredibly hard to trust Tesla at this time to put safety first, so it’s especially urgent for NHTSA to take action now.”