Over the weekend, there was a horrific crash in a Tesla Model S that claimed two lives. It took firefighters over four hours to extinguish the burning car. Significantly, crash investigators are certain that nobody was in the driver’s seat at the time of the crash; the bodies were found in the passenger’s seat and the back seat. Our reporting of this story generated a lot of comments wondering why we cover Tesla crashes like these, and speculating that there’s some sort of organized vendetta against the company. This happens every time we cover a Tesla crash or a video of someone sleeping at the wheel of a Tesla using Tesla’s Level 2 semi-autonomous system, which the company calls Autopilot. I figured I may as well explain our thinking, for what it’s worth.

I should be clear that I don’t actually think there’s a lot to be confused about with regard to why we cover Tesla crashes, but I suspect that some of our commenters may be getting tired of making the same accusations again and again in the comments, so perhaps if we just lay out all the thinking in one place it’ll be helpful for everyone.

For the wreck that happened on April 18, the fact that nobody was in the driver’s seat strongly suggests that the Tesla involved was operating in one of Tesla’s semi-autonomous modes. I’m not sure if enough of the electronics survived to confirm any of this (I sort of doubt it), but there aren’t many other reasons why you’d be in a moving Tesla with the driver’s seat unoccupied.

Some comments on this article were very much in line with what we normally get when we cover a Tesla crash:

As you can see from the above comment, there are some common themes covered here: that we’re actively seeking out Tesla crashes to cover, that there are many, many other non-Tesla crashes we don’t cover, and that, somehow, our monetary compensation is tied to how many negative things we can say about Tesla.

There’s more, of course:

I think it’s hilarious that I have to say some of these things, but I guess I may as well: nobody at Jalopnik gets paid any more or less for saying anything positive or negative about any company. I once tried to see if I could get DKW to kick me a check every time I wrote about one of their cars, but they claim that since “they haven’t been in business since 1969” such a deal wouldn’t help them, which is bullshit.

Also, while I may personally get erections for any number of weird and confusing stimuli, I do not get them for “shitting on Tesla.”

The honest truth is that it would be absolute madness not to write about Tesla, the issues with their technology, or dramatic crashes like these, not because of the callow and obvious reason that they get people clicking on stories, but because there is real, genuine news involved here.

As many, many Tesla enthusiasts like to point out, Tesla is a different kind of car company, and as such they’re trying things other carmakers aren’t; they’re pushing the boundaries of automotive technology in many arenas.

I absolutely agree with this, and I think Tesla should get lots of attention for the innovative things they do and the tech they develop. But that attention should be directed at Tesla when things go wrong as well as when they go right.

Yes, cars wreck all the time. No question, no doubt. But the reasons conventional cars crash are quite well understood, and, when they’re not, it makes sense that we should cover it.

When a carmaker’s particular technological decisions cause people to get killed, we cover it, like we did when Star Trek actor Anton Yelchin was killed in an accident with a Jeep that had a notoriously problematic shifter design, for example.

The reason we aggressively cover Tesla wrecks that appear to involve their semi-automated driving system, Autopilot, is that we’re at a fascinating transition point in automotive development, and these semi-autonomous systems are a new factor in how we as humans interact with our cars. When those systems are or appear to be a factor in a car crash, it’s our responsibility to report on it, think about it, comment on it, and evaluate whether we think these technological approaches make sense.

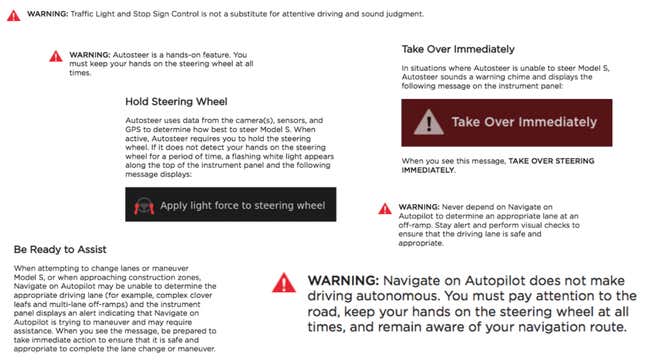

Yes, there is absolutely, no question, a human factor in all of this, too. It’s not just a technological issue. As gets pointed out in every article we write about an Autopilot crash, there are many warnings that tell drivers they have to remain alert, that the system is not actually self-driving, and on and on.

And yet, somehow, people manage to defeat Tesla’s driver monitoring systems again and again. Tesla doesn’t use a camera to monitor the driver’s eyes, like GM does with its Super Cruise system. GM also limits the roads Super Cruise is able to operate on.

There are many ways to defeat Tesla’s driver monitoring system, which is just a torque sensor in the steering wheel. You can see one method in this insipid video:

This high level of automation—even if it falls well short of actual self-driving—is still quite new in the automotive world, and we’re just learning how people interact with it, though we have known how people tend to act with similar vigilance systems since the 1940s, and the answer is pretty clearly “not well.”

So, while the driver is definitely a factor here, the technology and how it’s presented is a huge factor, too. Semi-autonomous systems are not the first dangerous feature cars have that can be abused, not by a long shot. But there are differences.

Take the most obvious and basic example: speed. Pretty much every car is capable of exceeding speed limits, and there are many cars you can buy with absolutely absurd amounts of power—power that, frankly, most drivers are not equipped to handle.

As a culture, we have decided that we want to be able to have access to stupid amounts of power in our cars, and we mitigate the inherent issues that presents with a comprehensive system of laws and enforcement—speed cameras, speed limits, heavy fines, license suspensions, jail time, police patrol vehicles, radar guns, insurance and so on—to attempt to keep drivers in check.

Is it irresponsible to sell a car with 700+ horsepower to any idiot? Maybe. Maybe not? I’m not really sure, but what I do know is that there are systems in place to keep excessive speeding under control (successful and otherwise) but of course we still get wrecks because of reckless speed, and we generally do not blame the automakers.

I’m not saying this is good or bad, partially because I’m conflicted. But I do know that it’s worth thinking about.

The difference between technology that enables significant and unsafe speeds and technology that does so much driver assisting that it lulls drivers into unrealistic expectations of what the car is capable of is this: no carmaker is touting how fast their cars go as a revolutionary safety feature.

Dodge has never pitched the Hellcat as the best choice for safe driving, and no Hellcat owners have angrily come at me telling me how I have blood on my hands because I’m standing in the way of everyone driving 700 hp cars.

Level 2 semi-autonomous systems, though, are routinely cast this way. This is a tweet from Tesla’s Elon Musk just a couple days ago:

I think there are a lot of issues with how this figure is computed, but that’s another article. For now, let’s just accept it as an example of how Tesla pitches Autopilot: as a safety feature.

I do believe that Autopilot, in a number of circumstances, can enhance safety. I think it can help mitigate driver fatigue, and provide a more forgiving system where certain errors don’t have immediate and dire consequences.

But it also, inherently and by design, fosters misuse that can end up in disaster.

That’s what tweets like this one from the Tesla Owners Online group aren’t getting:

The guy in that truck is absolutely an idiot. No question. He fucked around, and found out. We don’t blame GM for the issue because GM has never made any claims that their trucks had systems that allowed for safe driving while sitting on the roof. If you try shit like this, everything goes to hell almost immediately, as you can see in the video.

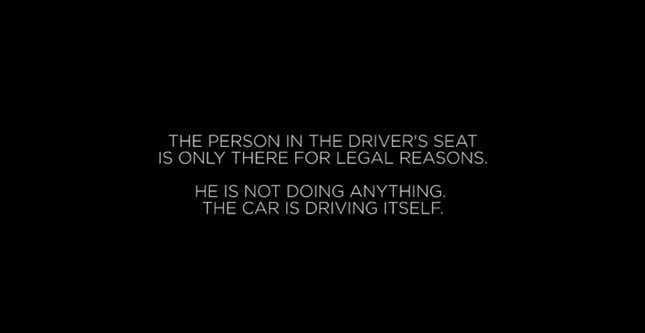

Yet Tesla has videos of their cars driving with Autopilot that include this statement at the beginning:

We’re dealing with something very different, here. When Autopilot is improperly used, it isn’t the same as a driver doing something obviously stupid in their car—it’s a driver doing something stupid that’s been at least partially and confusingly condoned by the automaker.

As journalists, we have to talk about Tesla wrecks that involve—or at least strongly appear to involve—an empty driver’s seat and Autopilot because it appears that Autopilot, a new and noteworthy technology, is a factor in the wreck. We have to cover it because a feature that is largely being promoted as a safety feature may be a significant component in why these wrecks happen in the first place.

There are many questions that deserve to be asked right now because now is when we define what our automotive future will be like. Are we okay with these sorts of wrecks in exchange for what semi-autonomy will give us?

Is safety really the motivating goal for Autopilot’s proponents? If so, why are these same people not interested in mandating full five-point harnesses for cars, or other aggressive safety measures?

None of the reasons why we write about a Tesla crash have anything to do with how any writer feels about the company or Elon Musk. That’s because the real issues involved—the role of semi-autonomy in driving, how it works with how humans work, how we want the future of driving to go—are so much more important than how we feel about Tesla.

If it were Volkswagen or Toyota or Lotus or GM or even Changli that was deploying a semi-autonomous system onto public roads in a beta-test capacity, like Tesla is, we’d write about them just as rigorously.

Also a factor is the culture around Tesla, and the lengths this culture will go to defend the company. Take a tweet like this one, for example:

Tesla’s defenders tend to love to use the acronym “FUD,” for “fear, uncertainty, and doubt,” suggesting that someone is running an organized disinformation campaign, and that people are not responding with a very reasonable level of fear, uncertainty, and doubt that would naturally result from any car that has had crashes involving semi-automation systems.

Also, the hyperbolic analogy of the Mustang and the brick would only make sense if Ford was selling a $10,000 Mustang Brick option that they claimed would let their car just about drive itself.

The culture around Tesla itself makes Tesla crash stories more worthy of reporting because the reactions are so unusual in the automotive world—the intensity and loyalty are interesting phenomena automotive culture-wise, and form a sort of ouroboral feedback loop: Tesla crashes are reported on for many reasons, including the defensive response by Tesla fans themselves, and the resulting comments and arguments feed into the cycle, and on and on it continues.

I suppose all of this is to say that if you don’t like that we report on Tesla/Autopilot wrecks, that’s too bad. There’s simply too much at stake, too many difficult, interesting, and important questions raised by each wreck to ignore them.

These wrecks are not the same as the many other car wrecks that happen every day. If we all actually give a shit about safety and the future of autonomy, we’ll talk about these wrecks, and do our best to figure out what’s going wrong—whether the issue is technical or cultural—and what we should do about it.

And if, for some reason, this sort of coverage hurts you, offends you, makes you angry and bitter and affronted, then maybe take a moment to close your eyes, really feel the hurt, and then try to determine why focused reporting on an important innovation made by an important company is something you feel personally.

Unless you’re Elon Musk. Then I get it.

Now, if you’ll excuse me, I have a bonus check to deposit.